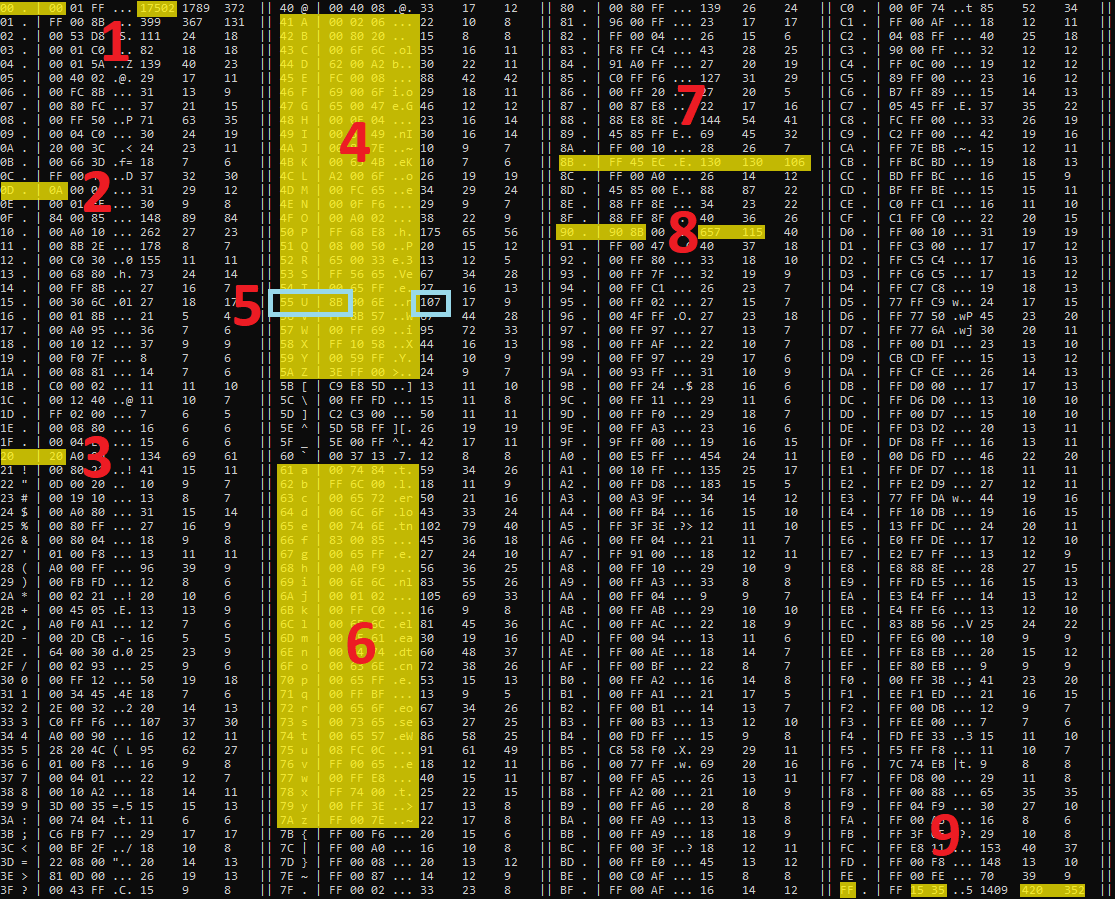

HexLasso has a feature called peek, which lets the user look at the most distinctive parts of a given file.

The result is a series of hexdumps, each covering 0x80 (128) bytes. Each hexdump comes with a reason that describes what makes it distinct from the other hexdumps.
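For reference, the layout used in the dumps below (an eight-digit hexadecimal offset, sixteen bytes per row, and an ASCII column where non-printable bytes appear as `.`) can be reproduced with a short Python sketch. It only illustrates the output format, not how peek chooses blocks; `hexdump_block`, `to_ascii` and the constants are hypothetical names, not part of HexLasso.

```python
from typing import List

BLOCK_SIZE = 0x80  # bytes covered by one peek hexdump
ROW_SIZE = 16      # bytes shown per hexdump row


def to_ascii(chunk: bytes) -> str:
    # Printable ASCII (0x20..0x7E) is shown as-is, anything else as '.'
    return "".join(chr(b) if 0x20 <= b <= 0x7E else "." for b in chunk)


def hexdump_block(data: bytes, offset: int) -> List[str]:
    """Format the BLOCK_SIZE bytes starting at `offset` as hexdump rows."""
    block = data[offset:offset + BLOCK_SIZE]
    rows = []
    for i in range(0, len(block), ROW_SIZE):
        chunk = block[i:i + ROW_SIZE]
        hex_part = " ".join(f"{b:02X}" for b in chunk)
        rows.append(f"{offset + i:08X} {hex_part} {to_ascii(chunk)}")
    return rows


# Usage (the file name is a placeholder):
# with open("sample.exe", "rb") as f:
#     print("\n".join(hexdump_block(f.read(), 0xE80)))
```

Given the same file, `hexdump_block(data, 0xE80)` should yield the rows of the first dump below.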

Below is the output of peek on a 151040-byte executable file.
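The file size determines how many 0x80-byte blocks peek can choose from, assuming (HexLasso does not say so) that it considers every block aligned on a 0x80 boundary. Every offset in the dumps below is a multiple of 0x80, which is at least consistent with that assumption. A quick check of the arithmetic:

```python
FILE_SIZE = 151040   # size of the executable analysed below
BLOCK_SIZE = 0x80    # bytes per hexdump

num_blocks = -(-FILE_SIZE // BLOCK_SIZE)      # ceiling division
last_block = (num_blocks - 1) * BLOCK_SIZE    # offset of the final block

# A few offsets taken from the dumps below; each should be block-aligned.
shown_offsets = [0xE80, 0x2F00, 0x4E00, 0xA800, 0x1F880, 0x24A80]
aligned = all(off % BLOCK_SIZE == 0 for off in shown_offsets)

print(num_blocks, hex(FILE_SIZE), hex(last_block), aligned)
# prints: 1180 0x24e00 0x24d80 True
```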

Reason: PredictedByte Coverage: 31

```
00000E80 B6 05 D6 A1 00 01 50 68 7C 18 00 01 FF 75 FC E8 ......Ph|....u..
00000E90 BD 01 00 00 0F B6 05 D7 A1 00 01 50 68 68 18 00 ...........Phh..
00000EA0 01 FF 75 FC E8 A8 01 00 00 0F B6 05 D8 A1 00 01 ..u.............
00000EB0 50 68 48 18 00 01 FF 75 FC E8 93 01 00 00 0F B6 PhH....u........
00000EC0 05 D9 A1 00 01 50 68 28 18 00 01 FF 75 FC E8 7E .....Ph(....u..~
00000ED0 01 00 00 0F B6 05 DA A1 00 01 50 68 14 18 00 01 ..........Ph....
00000EE0 FF 75 FC E8 69 01 00 00 0F B6 05 DB A1 00 01 50 .u..i..........P
00000EF0 68 F0 17 00 01 FF 75 FC E8 54 01 00 00 FF 35 04 h.....u..T....5.
```

Reason: QWordMatch Coverage: 39

```
00002F00 FE FF FF FF 41 4F 00 01 4A 4F 00 01 68 33 89 00 ....AO..JO..h3..
00002F10 01 64 FF 35 00 00 00 00 8B 44 24 10 89 6C 24 10 .d.5.....D$..l$.
00002F20 8D 6C 24 10 2B E0 53 56 57 A1 10 A0 00 01 31 45 .l$.+.SVW.....1E
00002F30 FC 33 C5 50 89 65 E8 FF 75 F8 8B 45 FC C7 45 FC .3.P.e..u..E..E.
00002F40 FE FF FF FF 89 45 F8 8D 45 F0 64 A3 00 00 00 00 .....E..E.d.....
00002F50 C3 50 FF D6 E9 6E E1 FF FF 50 FF D6 E9 73 E1 FF .P...n...P...s..
00002F60 FF B8 C4 09 00 00 A3 30 A1 00 01 A3 38 A1 00 01 .......0....8...
00002F70 C7 05 10 A1 00 01 0A A0 00 00 B8 D0 07 00 00 E9 ................
```

Reason: UnicodeString Coverage: 7E

```
00004E00 6D 00 73 00 68 00 65 00 6C 00 70 00 3A 00 2F 00 m.s.h.e.l.p.:./.
00004E10 2F 00 77 00 69 00 6E 00 64 00 6F 00 77 00 73 00 /.w.i.n.d.o.w.s.
00004E20 2F 00 3F 00 69 00 64 00 3D 00 35 00 64 00 31 00 /.?.i.d.=.5.d.1.
00004E30 38 00 64 00 35 00 66 00 62 00 2D 00 65 00 37 00 8.d.5.f.b.-.e.7.
00004E40 33 00 37 00 2D 00 34 00 61 00 37 00 33 00 2D 00 3.7.-.4.a.7.3.-.
00004E50 62 00 36 00 63 00 63 00 2D 00 64 00 63 00 63 00 b.6.c.c.-.d.c.c.
00004E60 63 00 36 00 33 00 37 00 32 00 30 00 32 00 33 00 c.6.3.7.2.0.2.3.
00004E70 31 00 00 00 48 00 45 00 4C 00 50 00 5F 00 45 00 1...H.E.L.P._.E.
```
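The `UnicodeString` block holds UTF-16LE text: every printable ASCII character is followed by a `00` byte. The sketch below counts the bytes in a block that belong to runs of such characters. The four-character minimum and the idea that Coverage is a hexadecimal byte count are our own assumptions, and `utf16le_ascii_coverage` is a hypothetical helper. On this block the count comes out to 126 (0x7E: the 57-character URL plus the truncated `HELP_E`), which happens to match the Coverage reported above, but that alone does not show HexLasso computes it this way.

```python
def utf16le_ascii_coverage(block: bytes, min_chars: int = 4) -> int:
    """Count bytes belonging to runs of at least `min_chars` UTF-16LE code
    units whose low byte is printable ASCII and whose high byte is 0x00."""
    covered = 0
    run = 0  # length of the current run, in characters
    for i in range(0, len(block) - 1, 2):
        lo, hi = block[i], block[i + 1]
        if hi == 0x00 and 0x20 <= lo <= 0x7E:
            run += 1
            continue
        if run >= min_chars:
            covered += 2 * run  # two bytes per UTF-16 code unit
        run = 0
    if run >= min_chars:  # a run may continue to the end of the block
        covered += 2 * run
    return covered


# Rebuild the 0x80-byte block at 0x4E00 from the dump above.
url = "mshelp://windows/?id=5d18d5fb-e737-4a73-b6cc-dccc63720231"
block = url.encode("utf-16-le") + b"\x00\x00" + "HELP_E".encode("utf-16-le")
coverage = utf16le_ascii_coverage(block)
print(len(block), coverage, f"{coverage:02X}")  # prints: 128 126 7E
```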

Reason: DWordMatch Coverage: 3A

```
00006F00 DC FD FF FF 50 FF 15 AC 12 00 01 59 59 0F B7 0B ....P......YY...
00006F10 66 85 C9 BE 04 01 00 00 0F 84 85 01 00 00 A1 7C f..............|
00006F20 A2 00 01 66 83 F9 26 74 46 8B 94 BD E0 FD FF FF ...f..&tF.......
00006F30 83 85 F0 FD FF FF 02 8B DF 69 DB 04 01 00 00 03 .........i......
00006F40 DA 66 89 0C 5D 20 B7 00 01 8B 8D F0 FD FF FF 0F .f..] ..........
00006F50 B7 09 8B 9D F0 FD FF FF 42 66 85 C9 89 94 BD E0 ........Bf......
00006F60 FD FF FF 75 BE 66 83 3B 26 0F 85 28 01 00 00 6A ...u.f.;&..(...j
00006F70 02 5A 03 DA 0F B7 0B 66 3B 08 89 9D F0 FD FF FF .Z.....f;.......
```
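The name `DWordMatch` (like `QWordMatch` and `WordMatch` elsewhere in this output) suggests repeated multi-byte values. In the block above, the four bytes `F0 FD FF FF` (little-endian `0xFFFFFDF0`) occur four times. Below is a sketch of one way to find repeated values of a given width. Whether HexLasso matches aligned or unaligned values, and how it scores them, is not known, so `repeated_values` and its `width` parameter are illustrative only. Run on three rows of the dump, it reports that value three times.

```python
from collections import Counter


def repeated_values(block: bytes, width: int = 4) -> Counter:
    """Count every `width`-byte window (at any offset) occurring more than once."""
    windows = Counter(block[i:i + width] for i in range(len(block) - width + 1))
    return Counter({value: n for value, n in windows.items() if n > 1})


# Rows 0x6F30, 0x6F40 and 0x6F50 of the DWordMatch dump above.
rows = bytes.fromhex(
    "83 85 F0 FD FF FF 02 8B DF 69 DB 04 01 00 00 03 "
    "DA 66 89 0C 5D 20 B7 00 01 8B 8D F0 FD FF FF 0F "
    "B7 09 8B 9D F0 FD FF FF 42 66 85 C9 89 94 BD E0"
)
for value, n in repeated_values(rows, width=4).most_common():
    print(value.hex(" ").upper(), n)  # prints: F0 FD FF FF 3
```

Passing `width=2` or `width=8` gives the word and qword variants of the same idea.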

Reason: X86Fragment Coverage: 5B

```
00007980 38 FF 15 38 11 00 01 EB 46 33 F6 6A 30 FF 35 4C 8..8....F3.j0.5L
00007990 A2 00 01 FF 35 A0 A2 00 01 FF 35 20 A0 00 01 FF ....5.....5 ....
000079A0 15 30 12 00 01 56 FF 15 64 10 00 01 FF 15 6C 10 .0...V..d.....l.
000079B0 00 01 8B F8 E9 54 FF FF FF FF 15 3C 11 00 01 85 .....T.....<....
000079C0 C0 7F 0C 3B FE 75 08 FF 15 6C 10 00 01 8B F8 FF ...;.u...l......
000079D0 B5 C8 FC FF FF FF 15 40 11 00 01 E8 9A F1 FF FF .......@........
000079E0 FF 35 88 A0 00 01 FF 15 74 11 00 01 39 35 54 BD .5......t...95T.
000079F0 00 01 75 04 8B C7 EB 03 6A FD 58 8B 4D FC 5F 5E ..u.....j.X.M._^
```

Reason: SpSameByteDiffSeq Coverage: 60

```
00008380 B4 98 00 00 CA 98 00 00 DA 98 00 00 E8 98 00 00 ................
00008390 FA 98 00 00 0A 99 00 00 1A 99 00 00 28 99 00 00 ............(...
000083A0 3E 99 00 00 50 99 00 00 5E 99 00 00 70 99 00 00 >...P...^...p...
000083B0 80 99 00 00 8C 99 00 00 9C 99 00 00 AE 99 00 00 ................
000083C0 CC 99 00 00 DC 99 00 00 EC 99 00 00 FE 99 00 00 ................
000083D0 0C 9A 00 00 16 9A 00 00 28 9A 00 00 36 9A 00 00 ........(...6...
000083E0 46 9A 00 00 54 9A 00 00 60 9A 00 00 6C 9A 00 00 F...T...`...l...
000083F0 7C 9A 00 00 88 9A 00 00 A0 9A 00 00 AC 9A 00 00 |...............
```

Reason: ByteMulOf4 Coverage: 0D

```
0000A700 00 00 00 00 00 00 00 00 88 F2 00 00 A8 08 00 00 ................
0000A710 00 00 00 00 00 00 00 00 30 FB 00 00 C8 06 00 00 ........0.......
0000A720 00 00 00 00 00 00 00 00 F8 01 01 00 68 05 00 00 ............h...
0000A730 00 00 00 00 00 00 00 00 60 07 01 00 58 19 01 00 ........`...X...
0000A740 00 00 00 00 00 00 00 00 B8 20 02 00 A8 25 00 00 ......... ...%..
0000A750 00 00 00 00 00 00 00 00 60 46 02 00 A8 10 00 00 ........`F......
0000A760 00 00 00 00 00 00 00 00 08 57 02 00 88 09 00 00 .........W......
0000A770 00 00 00 00 00 00 00 00 90 60 02 00 68 04 00 00 .........`..h...
```

Reason: AsciiString Coverage: 78

```
0000A800 70 79 72 69 67 68 74 20 28 63 29 20 4D 69 63 72 pyright (c) Micr
0000A810 6F 73 6F 66 74 20 43 6F 72 70 6F 72 61 74 69 6F osoft Corporatio
0000A820 6E 20 2D 2D 3E 0D 0A 3C 61 73 73 65 6D 62 6C 79 n -->..<assembly
0000A830 20 78 6D 6C 6E 73 3D 22 75 72 6E 3A 73 63 68 65  xmlns="urn:sche
0000A840 6D 61 73 2D 6D 69 63 72 6F 73 6F 66 74 2D 63 6F mas-microsoft-co
0000A850 6D 3A 61 73 6D 2E 76 31 22 20 6D 61 6E 69 66 65 m:asm.v1" manife
0000A860 73 74 56 65 72 73 69 6F 6E 3D 22 31 2E 30 22 3E stVersion="1.0">
0000A870 0D 0A 3C 61 73 73 65 6D 62 6C 79 49 64 65 6E 74 ..<assemblyIdent
```
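The `AsciiString` block is the start of an XML manifest. Extracting runs of printable ASCII, in the spirit of the Unix `strings` utility, is a natural way to surface such text. Below is a minimal sketch. The four-character minimum mirrors the default of GNU `strings` and is an assumption here, and `ascii_runs` is a hypothetical helper, not HexLasso code.

```python
import re
from typing import List, Tuple


def ascii_runs(block: bytes, min_len: int = 4) -> List[Tuple[int, str]]:
    """Return (offset, text) for each run of at least `min_len` printable
    ASCII bytes (0x20..0x7E). CR, LF and other control bytes end a run."""
    pattern = re.compile(rb"[\x20-\x7E]{%d,}" % min_len)
    return [(m.start(), m.group().decode("ascii")) for m in pattern.finditer(block)]


# Row 0000A820 of the AsciiString dump above.
row = bytes.fromhex("6E 20 2D 2D 3E 0D 0A 3C 61 73 73 65 6D 62 6C 79")
print(ascii_runs(row))  # prints: [(0, 'n -->'), (7, '<assembly')]
```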

Reason: SameAsciiByteSeq Coverage: 25

```
0000A980 22 0D 0A 20 20 20 20 20 20 20 20 20 20 20 20 6E "..            n
0000A990 61 6D 65 3D 22 4D 69 63 72 6F 73 6F 66 74 2E 57 ame="Microsoft.W
0000A9A0 69 6E 64 6F 77 73 2E 43 6F 6D 6D 6F 6E 2D 43 6F indows.Common-Co
0000A9B0 6E 74 72 6F 6C 73 22 0D 0A 20 20 20 20 20 20 20 ntrols"..       
0000A9C0 20 20 20 20 20 76 65 72 73 69 6F 6E 3D 22 36 2E      version="6.
0000A9D0 30 2E 30 2E 30 22 0D 0A 20 20 20 20 20 20 20 20 0.0.0"..        
0000A9E0 20 20 20 20 70 72 6F 63 65 73 73 6F 72 41 72 63     processorArc
0000A9F0 68 69 74 65 63 74 75 72 65 3D 22 2A 22 0D 0A 20 hitecture="*".. 
```

Reason: SameByteDiffSeq Coverage: 08

```
0000AB80 28 00 00 00 30 00 00 00 60 00 00 00 01 00 04 00 (...0...`.......
0000AB90 00 00 00 00 80 04 00 00 00 00 00 00 00 00 00 00 ................
0000ABA0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 80 00 ................
0000ABB0 00 80 00 00 00 80 80 00 80 00 00 00 80 00 80 00 ................
0000ABC0 80 80 00 00 80 80 80 00 C0 C0 C0 00 00 00 FF 00 ................
0000ABD0 00 FF 00 00 00 FF FF 00 FF 00 00 00 FF 00 FF 00 ................
0000ABE0 FF FF 00 00 FF FF FF 00 00 00 00 00 00 00 00 00 ................
0000ABF0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
```

Reason: SpByteMulOf4 Coverage: 15

```
0000AE00 E8 88 E8 88 78 E7 87 E8 88 8F 88 F7 87 21 00 00 ....x........!..
0000AE10 00 00 00 88 8E 88 E8 88 88 78 87 E8 E8 88 E8 8C .........x......
0000AE20 8E 88 8F 88 E7 13 00 00 00 00 00 88 88 88 88 88 ................
0000AE30 E8 8E 88 88 88 E8 78 E8 87 8F F8 F8 77 13 00 00 ......x.....w...
0000AE40 00 00 00 08 E8 88 88 88 88 88 8E 88 E8 88 E8 8E ................
0000AE50 7E 78 88 88 87 30 00 00 00 00 00 08 F8 88 8E FE ~x...0..........
0000AE60 88 E8 88 88 88 E8 87 87 88 E8 F8 F8 77 73 00 00 ............ws..
0000AE70 00 00 00 00 88 88 8F 88 88 88 88 E8 88 87 8E 8E ................
```

Reason: SpSameByteSeq Coverage: 28

```
0000B700 C0 C0 C0 00 00 00 FF 00 00 FF 00 00 00 FF FF 00 ................
0000B710 FF 00 00 00 FF 00 FF 00 FF FF 00 00 FF FF FF 00 ................
0000B720 00 00 87 77 77 77 77 78 00 00 8F F8 FF FF FF F3 ...wwwwx........
0000B730 08 88 88 88 88 87 88 F7 08 88 E8 8E 8E 8E 7F F7 ................
0000B740 08 88 F8 88 88 88 E8 F7 08 88 E8 8E 8E 87 88 F7 ................
0000B750 08 8F F8 8F 88 E8 E8 F7 00 88 88 E8 88 88 78 87 ..............x.
0000B760 00 88 88 F8 8E 8E 8E F7 00 88 88 88 88 88 87 F7 ................
0000B770 00 88 F8 88 88 E8 E8 87 00 F8 88 88 EF 88 87 87 ................
```

Reason: SpAsciiString Coverage: 46

```
0000B800 00 01 00 00 00 01 00 00 00 00 00 00 00 2B 4E 00 .............+N.
0000B810 05 31 4D 00 0B 35 4F 00 03 33 54 00 07 38 58 00 .1M..5O..3T..8X.
0000B820 0A 3D 5C 00 0B 40 5C 00 24 49 59 00 0E 45 63 00 .=\..@\.$IY..Ec.
0000B830 13 45 64 00 13 4A 67 00 1D 4D 65 00 15 4A 68 00 .Ed..Jg..Me..Jh.
0000B840 18 4E 6A 00 1B 52 6D 00 1E 55 71 00 24 53 6B 00 .Nj..Rm..Uq.$Sk.
0000B850 35 55 61 00 22 56 70 00 32 5E 71 00 2A 62 7F 00 5Ua."Vp.2^q.*b..
0000B860 36 63 79 00 6A 64 50 00 43 5A 66 00 4A 64 69 00 6cy.jdP.CZf.Jdi.
0000B870 50 66 6F 00 44 6B 7A 00 5B 6E 72 00 6E 76 6E 00 Pfo.Dkz.[nr.nvn.
```

Reason: AsciiStringOfDigits Coverage: 0C

```
0000BE80 52 51 50 45 40 3F 3F 3E 32 2C 2C 28 27 27 27 27 RQPE@??>2,,(''''
0000BE90 2C 2B 32 33 33 B9 E8 E4 E6 E6 E0 E3 F0 B8 68 7A ,+233.........hz
0000BEA0 25 04 55 10 00 00 00 00 00 C5 53 51 52 51 50 4E %.U.......SQRQPN
0000BEB0 4E 44 44 44 3F 3F 2C 28 28 2C 28 2C 2C 2B 33 33 NDDD??,((,(,,+33
0000BEC0 32 32 32 27 2C 36 EE EE E8 DA D0 BD BD CD 6A 7C 222',6........j|
0000BED0 64 04 15 10 00 00 00 00 00 A3 4D 4E 4E 45 44 44 d.........MNNEDD
0000BEE0 44 44 44 49 2C 32 2C 2C 32 32 33 33 32 33 32 2C DDDI,2,,2233232,
0000BEF0 32 2C 2C 32 43 2B B9 BD BD CF D0 CF E3 ED 76 7A 2,,2C+........vz
```

Reason: DecByteSeq Coverage: 20

```
0000CD80 D9 B0 AE AC A4 97 8E 85 83 3B CF 92 0E 4D 00 00 .........;...M..
0000CD90 00 00 00 00 00 00 00 00 FC FA E8 E8 EF F0 F0 EE ................
0000CDA0 E3 DA AE AC A4 94 8E 89 85 3E 98 B6 10 4F 00 00 .........>...O..
0000CDB0 00 00 00 00 00 00 00 00 DA FC F7 F7 EF EF E8 E8 ................
0000CDC0 E7 E2 DA DA AC A5 99 91 8F 84 84 7D 13 00 00 00 ...........}....
0000CDD0 00 00 00 00 00 00 00 00 00 FB FC FA FB FC FC FB ................
0000CDE0 FB F8 F1 E3 D9 99 8B 7A 25 1D 24 89 13 00 00 00 .......z%.$.....
0000CDF0 00 00 00 00 00 00 00 00 00 DF FF FF FC FA F4 E7 ................
```
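In the `DecByteSeq` block, runs of strictly decreasing byte values stand out, for instance `D9 B0 AE AC A4 97 8E 85 83 3B` at the very start. A single linear scan finds the longest such run. The sketch below (a hypothetical `longest_monotonic_run`, not HexLasso's code) also handles increasing runs through a flag.

```python
from typing import Tuple


def longest_monotonic_run(block: bytes, decreasing: bool = True) -> Tuple[int, int]:
    """Return (start, length) of the longest strictly decreasing
    (or, with decreasing=False, strictly increasing) run of bytes."""
    if not block:
        return (0, 0)
    best_start, best_len = 0, 1
    start = 0
    for i in range(1, len(block)):
        ok = block[i] < block[i - 1] if decreasing else block[i] > block[i - 1]
        if not ok:
            start = i  # the run is broken; a new one begins here
        length = i - start + 1
        if length > best_len:
            best_start, best_len = start, length
    return (best_start, best_len)


# First row (0000CD80) of the DecByteSeq dump above.
row = bytes.fromhex("D9 B0 AE AC A4 97 8E 85 83 3B CF 92 0E 4D 00 00")
print(longest_monotonic_run(row))  # prints: (0, 10)
```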

Reason: SpPredictedByte Coverage: 05

```
0000D700 E1 D6 A5 00 E2 D9 AA 00 E3 DA AC 00 E4 DA AE 00 ................
0000D710 E5 DB B1 00 E6 DC B3 00 E6 DE B6 00 E7 DE B8 00 ................
0000D720 E0 D9 BD 00 E8 DF B9 00 E8 E0 BA 00 E9 E0 BE 00 ................
0000D730 A7 C4 D1 00 E2 DC C2 00 E3 DE C8 00 E0 DE DB 00 ................
0000D740 EA E2 C0 00 EA E4 C1 00 EB E4 C4 00 EC E4 C5 00 ................
0000D750 EA E4 CB 00 EC E6 C9 00 EE E7 CE 00 EE E8 CE 00 ................
0000D760 EE E8 D0 00 E9 E6 D9 00 EC E9 DF 00 F0 EA D2 00 ................
0000D770 F1 EA D4 00 F1 EC D7 00 F1 ED DA 00 F3 EE DC 00 ................
```

Reason: PredictedByteSeq Coverage: 80

```
0000DC80 F9 04 5A 3E 81 96 4F A0 E5 13 68 F9 04 5A 3E 81 ..Z>..O...h..Z>.
0000DC90 96 4F A0 E5 13 68 F9 04 5A 3E 81 96 4F A0 E5 13 .O...h..Z>..O...
0000DCA0 68 F9 04 5A 3E 81 96 4F A0 E5 13 68 F9 04 5A 3E h..Z>..O...h..Z>
0000DCB0 81 96 4F A0 E5 13 68 F9 04 5A 3E 81 96 4F A0 E5 ..O...h..Z>..O..
0000DCC0 13 68 F9 04 5A 3E 81 96 4F A0 E5 13 68 F9 04 5A .h..Z>..O...h..Z
0000DCD0 3E 81 96 4F A0 E5 13 68 F9 04 5A 3E 81 96 4F A0 >..O...h..Z>..O.
0000DCE0 E5 13 68 F9 04 5A 3E 81 96 4F A0 E5 13 68 F9 04 ..h..Z>..O...h..
0000DCF0 5A 3E 81 96 4F A0 E5 13 68 F9 04 5A 3E 81 96 4F Z>..O...h..Z>..O
```
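The `PredictedByteSeq` block is one 11-byte pattern (`F9 04 5A 3E 81 96 4F A0 E5 13 68`) repeated across the whole 0x80 bytes, so every byte after the first eleven equals the byte eleven positions earlier. One way to detect this is a brute-force search for the smallest period. `smallest_period` and its `max_period` cut-off are illustrative choices of ours, not HexLasso's.

```python
from typing import Optional


def smallest_period(block: bytes, max_period: int = 32) -> Optional[int]:
    """Smallest p such that block[i] == block[i - p] for every i >= p."""
    for p in range(1, min(max_period, len(block) // 2) + 1):
        if all(block[i] == block[i - p] for i in range(p, len(block))):
            return p
    return None  # no short repeating pattern found


pattern = bytes.fromhex("F9 04 5A 3E 81 96 4F A0 E5 13 68")
block = (pattern * 12)[:0x80]   # rebuilds the dump at 0000DC80
print(smallest_period(block))   # prints: 11
```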

Reason: AsciiByte Coverage: 2C

```
0000E700 6D 32 4B 4A AA AA 57 D4 AB AC 25 2B 10 A8 80 1C m2KJ..W...%+....
0000E710 66 55 E3 99 76 9D 1A 7B 0C 1A B2 E1 D5 77 46 AC fU..v..{.....wF.
0000E720 E9 3E E0 CD C6 E9 41 21 67 EA 41 38 A6 F7 61 4F .>....A!g.A8..aO
0000E730 E3 01 C9 28 2A FB 64 72 D0 7C 2A 88 13 4C 5A 8E ...(*.dr.|*..LZ.
0000E740 9D 15 74 74 D2 DC C0 8F E7 27 26 7F 59 B9 AC F6 ..tt.....'&.Y...
0000E750 E4 A6 ED 3B D5 6B 32 D4 40 13 15 AA 1A 83 0F 23 ...;.k2.@......#
0000E760 19 7A B8 FB C0 41 45 0B D2 E2 07 83 24 66 64 94 .z...AE.....$fd.
0000E770 94 56 82 28 8E 93 24 60 C7 72 17 95 BE AD 92 93 .V.(..$`.r......
```

Reason: ExtAsciiByte Coverage: 2F

```
0000F880 A9 79 99 A7 F6 39 BD 0D 9D FB 0D FC E6 99 1B 7F .y...9..........
0000F890 75 A2 D5 ED 08 35 60 F7 DF FC 93 2F 9F B8 EB BF u....5`..../....
0000F8A0 4E 07 47 86 EF 67 D8 C2 BE 85 E7 3A 74 3D 76 EF N.G..g.....:t=v.
0000F8B0 DD 97 45 DF 7A EF 9F DE BE E7 FE BF 0D 47 D5 E2 ..E.z........G..
0000F8C0 F4 EF FE F8 A7 D3 03 DF 19 5A 9F 53 56 56 C6 0E .........Z.SVV..
0000F8D0 44 86 12 20 A5 C3 11 59 D1 A3 41 E2 9B 49 12 48 D.. ...Y..A..I.H
0000F8E0 4A 6E 57 9D 95 9E C9 C6 9F B2 24 38 72 CE B8 AE JnW.......$8r...
0000F8F0 50 12 FB 38 B1 89 1E 88 03 AA A7 00 9D 88 0D 12 P..8............
```

Reason: WordMatch Coverage: 6D

```
00011000 B0 7E EF DB BE 6B F7 F2 DB B7 BD BA FB 9A F3 F8 .~...k..........
00011010 46 F2 3B D7 E6 E3 FE CF 95 E7 F7 94 FC 00 7E A7 F.;...........~.
00011020 E4 67 6A FE 1A FC 8E F7 77 6A FF A6 EC A7 08 C0 .gj.....wj......
00011030 9F F4 77 BC 3F 93 7E 34 8F F7 D7 09 40 8F F7 3F ..w.?.~4....@..?
00011040 85 64 A0 26 00 C6 F5 54 01 76 FC 6F 13 00 C1 CF .d.&...T.v.o....
00011050 1C 80 4D 00 5A FE 1F 50 04 A0 E5 FF 7E A1 F7 6F ..M.Z..P....~..o
00011060 8E 00 08 7E 43 00 71 20 00 5A CC FA 5D 12 B5 0E ...~C.q .Z..]...
00011070 A5 3F D8 02 B4 35 13 FC F3 57 EF 90 B0 D5 DB 25 .?...5...W.....%
```

Reason: SameByteSeq Coverage: 80

```
0001F880 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F890 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F8A0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F8B0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F8C0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F8D0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F8E0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
0001F8F0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
```
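The `SameByteSeq` block is 0x80 zero bytes, and its Coverage of 80 equals the full block length. A run-length scan finds the longest run of a single byte value. Reading Coverage as that run length in hex is an assumption that fits this one block, not documented behaviour, and `longest_same_byte_run` is a hypothetical name.

```python
from itertools import groupby
from typing import Tuple


def longest_same_byte_run(block: bytes) -> Tuple[int, int]:
    """Return (byte_value, run_length) for the longest run of one repeated byte."""
    best_value, best_len = 0, 0
    for value, group in groupby(block):  # iterating bytes yields ints
        length = sum(1 for _ in group)
        if length > best_len:
            best_value, best_len = value, length
    return (best_value, best_len)


value, length = longest_same_byte_run(bytes(0x80))
print(f"{value:02X} {length:02X}")  # prints: 00 80
```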

Reason: SpDecByteSeq Coverage: 3E

```
00023700 D8 CA 8A DF E9 DF BB 9E E2 DC C1 FF E0 D9 BC FF ................
00023710 DE D6 B6 FF DB D3 AE FF D9 CF A8 FF D7 CD A0 FF ................
00023720 D4 C8 96 FF D2 C5 8F FF CD C0 83 FF B5 A6 68 FF ..............h.
00023730 EC E9 DF FF 61 84 91 FF 00 00 00 00 00 00 00 00 ....a...........
00023740 DA CD 8F BC E5 DC B2 AF F0 EA D2 FF EE E8 CD FF ................
00023750 ED E6 C7 FF E9 E2 C0 FF E7 DF B7 FF E4 DB AF FF ................
00023760 E1 D7 A6 FF DF D3 9D FF DB CD 91 FF C2 B3 73 FF ..............s.
00023770 ED E8 D0 FF 61 84 91 FF 00 00 00 00 00 00 00 00 ....a...........
```

Reason: SymmetricByteSeq Coverage: 12

```
00023900 10 00 01 00 04 00 68 06 00 00 01 00 20 20 10 00 ......h.....  ..
00023910 01 00 04 00 E8 02 00 00 02 00 18 18 10 00 01 00 ................
00023920 04 00 E8 01 00 00 03 00 10 10 10 00 01 00 04 00 ................
00023930 28 01 00 00 04 00 30 30 00 00 01 00 08 00 A8 0E (.....00........
00023940 00 00 05 00 20 20 00 00 01 00 08 00 A8 08 00 00 ....  ..........
00023950 06 00 18 18 00 00 01 00 08 00 C8 06 00 00 07 00 ................
00023960 10 10 00 00 01 00 08 00 68 05 00 00 08 00 00 00 ........h.......
00023970 00 00 01 00 20 00 58 19 01 00 09 00 30 30 00 00 .... .X.....00..
```

Reason: SpIncByteSeq Coverage: 2F

```
00024100 8D 3E 93 3E 99 3E 9E 3E B7 3E BD 3E C7 3E CD 3E .>.>.>.>.>.>.>.>
00024110 D3 3E E7 3E EC 3E F2 3E F8 3E FE 3E 09 3F 10 3F .>.>.>.>.>.>.?.?
00024120 1A 3F 20 3F 31 3F 39 3F 4F 3F 55 3F 63 3F 6E 3F .? ?1?9?O?U?c?n?
00024130 94 3F 9A 3F A3 3F A9 3F B3 3F C7 3F D0 3F E7 3F .?.?.?.?.?.?.?.?
00024140 F2 3F F9 3F 00 20 00 00 60 01 00 00 04 30 11 30 .?.?. ..`....0.0
00024150 25 30 2C 30 43 30 51 30 56 30 80 30 89 30 94 30 %0,0C0Q0V0.0.0.0
00024160 9C 30 B2 30 B9 30 BF 30 C6 30 CC 30 D2 30 DB 30 .0.0.0.0.0.0.0.0
00024170 E1 30 F3 30 FF 30 04 31 0E 31 1D 31 25 31 31 31 .0.0.0.1.1.1%111
```
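In the `SpIncByteSeq` block, most bytes at odd offsets are `3E`, `3F`, `30` or `31`. That pattern hints the data reads better as 16-bit little-endian values: `8D 3E 93 3E 99 3E ...` becomes 0x3E8D, 0x3E93, 0x3E99, ..., and those values rise steadily. The output does not explain what the `Sp` prefix means, so treating the block as 16-bit words is our assumption. The helpers `le_words` and `longest_increasing_run` are hypothetical.

```python
import struct
from typing import List


def le_words(block: bytes) -> List[int]:
    """Interpret the block as consecutive unsigned 16-bit little-endian integers."""
    count = len(block) // 2
    return list(struct.unpack(f"<{count}H", block[:2 * count]))


def longest_increasing_run(values: List[int]) -> int:
    """Length of the longest strictly increasing run in `values`."""
    best = current = 1 if values else 0
    for prev, cur in zip(values, values[1:]):
        current = current + 1 if cur > prev else 1
        best = max(best, current)
    return best


# First row (00024100) of the SpIncByteSeq dump above.
row = bytes.fromhex("8D 3E 93 3E 99 3E 9E 3E B7 3E BD 3E C7 3E CD 3E")
words = le_words(row)
print([hex(w) for w in words[:3]], longest_increasing_run(words))
# prints: ['0x3e8d', '0x3e93', '0x3e99'] 8
```

The row at 00024140, where `00 20 00 00` interrupts the pattern, shows how a single out-of-sequence word resets the run.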

Reason: AsciiStringOfSpecial Coverage: 1F

```
00024A80 B8 39 BE 39 C4 39 CA 39 03 3A 09 3A 11 3A 27 3A .9.9.9.9.:.:.:':
00024A90 2D 3A 33 3A 3A 3A 41 3A 52 3A 5B 3A C5 3A FA 3A -:3:::A:R:[:.:.:
00024AA0 07 3B 1F 3B 45 3B F6 3B 3A 3C 40 3C 5C 3C 72 3C .;.;E;.;:<@<\<r<
00024AB0 8B 3C B3 3C D7 3C DD 3C F9 3C 35 3D 40 3D 4E 3D .<.<.<.<.<5=@=N=
00024AC0 6B 3D 97 3D AA 3D C5 3D D5 3D DB 3D E3 3D F7 3D k=.=.=.=.=.=.=.=
00024AD0 27 3E 3A 3E 66 3E 84 3E 8B 3E 91 3E 97 3E 9E 3E '>:>f>.>.>.>.>.>
00024AE0 A5 3E B0 3E BE 3E C7 3E CE 3E D9 3E E7 3E EC 3E .>.>.>.>.>.>.>.>
00024AF0 F2 3E F9 3E 1B 3F 24 3F 2F 3F 3D 3F 43 3F 49 3F .>.>.?$?/?=?C?I?
```